Improving Driver Engagement with AI

Enhancing Driver Engagement for L2, L2+, and L3 Features using Interactive AI with Positive Reinforcement

1. Introduction

While working on Level 2, Level 2+, and Level 3 automated driving systems, I contributed to a proof of concept (PoC) designed to enhance driver awareness systems. Conventional driver awareness estimators rely on physical indicators like eye movement and head position to gauge engagement. However, these systems may misclassify drivers who appear visually attentive but are cognitively disengaged, such as drivers who are daydreaming or otherwise mentally distracted.

The goal of this PoC was to address both physical and cognitive engagement by integrating an AI-powered assistant, Avatar, into the existing driver monitoring framework. Avatar actively engaged the driver through conversational interactions, pulling data from localization and perception components to generate context-aware questions. For example:

“What color is the car in front of you?”

“Is it raining outside?”

Drivers’ responses served as evidence of not just visual engagement but also their mental connection to the driving task. Correct responses triggered positive reinforcement (e.g., “Thank you for staying alert!”), while incorrect or missing responses triggered escalating alerts, starting with verbal prompts and progressing to haptic, visual, or text-based feedback.

I collaborated with user experience engineers, functional safety engineers, and HMI designers to shape the look and feel of this feature, including interface elements and feedback modalities.

2. Concept Overview

The Avatar AI Proactive Driver Engagement feature is designed to actively involve the driver in the driving task. The following steps describe the methodology and how it would appear within the vehicle HMI for an L2+ hands-off driving feature.

1. ADAS/Automated Driving Feature Initiation:

When the automated driving feature is ready to be activated, the driver requests that it be turned on.

Figure 1. Driver Engages Automated Driving

2. Avatar Interaction Initiation:

• L2+ hands-off automated driving feature is active.

• When cognitive engagement needs reinforcement, Avatar triggers an interaction.

• Avatar asks a context-aware question based on localization and perception data.

• Example Question: “What color is the car in front of you?”
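A minimal Python sketch of how step 2 could select a context-aware question, assuming the perception and localization stacks expose a lead-vehicle color and a rain flag. `SceneContext`, `Question`, and `generate_question` are hypothetical names for illustration only; they are not taken from the PoC, whose demo was built in MATLAB. The design choice it shows is that Avatar only asks questions whose answer the vehicle can verify from its own data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SceneContext:
    """Hypothetical snapshot of perception and localization outputs."""
    lead_vehicle_color: Optional[str]  # from camera perception; None if no lead vehicle
    is_raining: Optional[bool]         # e.g., from weather data; None if unknown


@dataclass
class Question:
    text: str
    expected_answer: str  # canonical lowercase answer used later for checking


def generate_question(ctx: SceneContext) -> Optional[Question]:
    """Pick a question whose answer the system can verify from its own sensors."""
    if ctx.lead_vehicle_color is not None:
        return Question("What color is the car in front of you?",
                        ctx.lead_vehicle_color.lower())
    if ctx.is_raining is not None:
        return Question("Is it raining outside?",
                        "yes" if ctx.is_raining else "no")
    # No verifiable context available: skip this interaction rather than guess.
    return None
```

For example, `generate_question(SceneContext("Red", None))` yields the lead-vehicle question with expected answer `"red"`.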

3. Driver Response:

The driver responds verbally, and the AI processes their response.

HMI Visual: An indicator shows the response is being analyzed.

Figure 2. Avatar Interaction During Hands-off Automated Driving
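The analysis in step 3 can be sketched as a simple classifier over a speech-to-text transcript. This assumes an upstream speech recognizer supplies the transcript (or `None` when the driver says nothing); the synonym table and the function names `normalize` and `evaluate_response` are hypothetical.

```python
import re
from typing import Optional

# Hypothetical synonym table: maps spoken variants to a canonical answer.
SYNONYMS = {
    "grey": "gray",
    "yeah": "yes",
    "yep": "yes",
    "nope": "no",
}


def normalize(text: str) -> list[str]:
    """Lowercase the transcript, split it into words, and map synonyms."""
    words = re.findall(r"[a-z]+", text.lower())
    return [SYNONYMS.get(w, w) for w in words]


def evaluate_response(transcript: Optional[str], expected: str) -> str:
    """Classify a driver reply as 'correct', 'incorrect', or 'missing'."""
    if transcript is None or not transcript.strip():
        return "missing"
    return "correct" if expected in normalize(transcript) else "incorrect"
```

Keyword matching like this is deliberately simple: a reply such as "not red" would still count as correct, so a production system would likely need proper intent or negation handling.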

4. Feedback:

• Positive Feedback: If the response is correct, Avatar provides encouraging feedback, such as “Thank you for staying alert!”

• Escalating Feedback: If the response is incorrect or missing, the system issues a verbal correction or escalates to haptic/visual alerts.

• HMI Visual: Feedback is displayed in text and audio, and the escalation status may be highlighted on the dashboard.

Figure 3. Driver Engagement Feature Provides Positive Reinforcement After Driver Provides Feedback
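The feedback rules in step 4 behave like a small escalation ladder. The sketch below assumes each consecutive miss (incorrect or missing answer) raises the alert by one level and a correct answer resets it; the specific ordering of levels and the names `Alert` and `EscalationLadder` are assumptions for illustration, not the PoC's actual design.

```python
from enum import IntEnum


class Alert(IntEnum):
    NONE = 0
    VERBAL = 1          # spoken prompt or correction from Avatar
    HAPTIC_VISUAL = 2   # e.g., vibration plus a highlighted dashboard status
    TEXT = 3            # persistent text warning on the HMI (assumed highest level)


class EscalationLadder:
    """Hypothetical tracker mapping consecutive misses to an alert level."""

    def __init__(self) -> None:
        self.misses = 0

    def update(self, result: str) -> tuple[str, Alert]:
        if result == "correct":
            self.misses = 0
            return "Thank you for staying alert!", Alert.NONE
        # 'incorrect' and 'missing' both count as a miss; cap at the top level.
        self.misses += 1
        level = Alert(min(self.misses, Alert.TEXT))
        return f"Escalating to {level.name}", level
```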

5. Continuous Monitoring:

• The system continues monitoring and dynamically adjusts interactions based on driver state.
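One way to "dynamically adjust interactions" is to adapt how often Avatar asks questions. This is a hedged sketch assuming a multiplicative back-off after correct answers and a halving after misses; the function name, factors, and bounds are all hypothetical, chosen only to show the trade-off between staying non-intrusive and re-checking a disengaged driver quickly.

```python
def next_interval_s(current_s: float, result: str,
                    min_s: float = 60.0, max_s: float = 600.0) -> float:
    """Return the delay before Avatar's next question (all values hypothetical).

    Correct answers back off so the feature stays non-intrusive; misses tighten
    the interval so a possibly disengaged driver is re-checked sooner.
    """
    if result == "correct":
        proposed = current_s * 1.5
    else:  # 'incorrect' or 'missing'
        proposed = current_s / 2
    # Keep the interval inside the configured bounds.
    return max(min_s, min(max_s, proposed))
```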

3. Architecture Overview

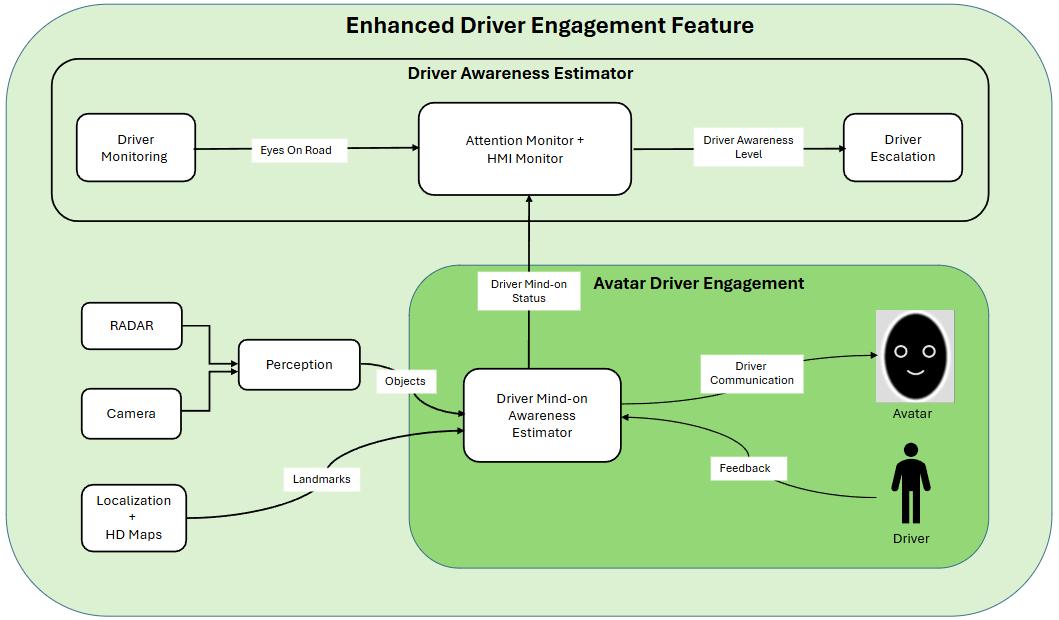

As previously mentioned, the driver engagement AI feature builds upon existing driver awareness, localization, and perception algorithms. This architecture shows how a driver awareness estimator integrates with the Avatar Driver Engagement system and how these components interact to maintain both physical and cognitive driver engagement in a Level 2–3 automated driving system.

Figure 4. Enhanced Driver Engagement Architecture Diagram

High-Level Flow Summary

The Driver Monitoring System tracks physical engagement using internal sensors.

The Attention Monitor evaluates physical attentiveness and communicates with the HMI to show the driver their current engagement state.

The Driver Mind-On Awareness Estimator combines physical and cognitive engagement assessments using data from perception, localization, and the driver monitoring system.

Avatar AI dynamically interacts with the driver, providing a proactive means to reinforce cognitive engagement through interactive questions and feedback.

Alerts are escalated as necessary using visual, haptic, and verbal feedback mechanisms.

This integrated architecture is designed to keep the driver’s eyes and mind on the road, enhancing overall safety and takeover readiness in semi-automated driving scenarios.

Driver Awareness Estimator (Upper Section)

This section represents the traditional driver awareness estimator's framework, focusing on monitoring physical engagement and escalating alerts when disengagement is detected.

Driver Monitoring:

Input: The system uses an internal camera to track head movement and eye gaze to ensure the driver is physically attentive.

Output: Feeds engagement metrics to the next stages of the estimator.

Attention Monitor + HMI Monitor:

Functionality: Combines driver engagement metrics with HMI feedback to determine the driver’s awareness level. The HMI visualizes this information in real time on the cluster or infotainment system, such as an “Engaged” or “Distracted” status.

Driver Awareness Level:

Processing: This module evaluates the driver’s state based on attention monitoring and determines whether intervention is necessary.

Driver Escalation:

Functionality: Triggers appropriate alerts (visual, haptic, or text-based) to re-engage the driver if disengagement is detected.
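The upper-section pipeline (Driver Monitoring, Attention Monitor, Driver Awareness Level, Driver Escalation) can be approximated with an eyes-off-road timer. This sketch assumes the driver monitoring camera reports a per-sample "gaze on road" flag; the class name and both thresholds are hypothetical and illustrative, not calibrated values from the PoC or any regulation.

```python
from dataclasses import dataclass


@dataclass
class AttentionMonitor:
    """Hypothetical eyes-off-road timer; thresholds are illustrative, not calibrated."""
    distracted_after_s: float = 2.0   # eyes off road this long -> "Distracted"
    escalate_after_s: float = 4.0     # eyes off road this long -> request escalation
    eyes_off_s: float = 0.0           # accumulated continuous eyes-off-road time

    def update(self, gaze_on_road: bool, dt_s: float) -> tuple[str, bool]:
        """Consume one driver-monitoring sample; return (HMI status, escalate?)."""
        if gaze_on_road:
            self.eyes_off_s = 0.0
        else:
            self.eyes_off_s += dt_s
        status = "Distracted" if self.eyes_off_s >= self.distracted_after_s else "Engaged"
        return status, self.eyes_off_s >= self.escalate_after_s
```

The returned status string corresponds to the "Engaged"/"Distracted" indication on the cluster, and the boolean stands in for the Driver Escalation trigger.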

Avatar Driver Engagement (Lower Section)

This section highlights the enhancement provided by the Avatar AI system to ensure cognitive engagement, going beyond traditional physical metrics to actively involve the driver in the driving task.

Perception (Inputs from RADAR and Cameras):

Functionality: Gathers data on objects (e.g., nearby vehicles, pedestrians) in the environment using RADAR and camera sensors.

Output: Provides the contextual information needed to generate questions, such as object attributes or positions.

Localization + HD Maps:

Functionality: Supplies environmental and geographic context, such as road type, landmarks, and weather conditions.

Output: Enables Avatar to tailor questions relevant to the driving scenario, e.g., “Are we near the exit for Main Street?”
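A localization-based question such as the Main Street example can be sketched by comparing the vehicle's position along the route with exit positions from the map. This assumes both are available as distances along the planned route; `MapExit`, `exit_question`, and the 2 km "near" threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MapExit:
    """Hypothetical HD-map exit record, positioned by distance along the route."""
    name: str
    route_position_m: float


def exit_question(ego_route_position_m: float, exits: list[MapExit],
                  near_threshold_m: float = 2000.0) -> Optional[tuple[str, str]]:
    """Return (question, expected answer) about the next exit ahead, if any."""
    ahead = [e for e in exits if e.route_position_m > ego_route_position_m]
    if not ahead:
        return None  # no exit ahead on the route: no verifiable question
    nxt = min(ahead, key=lambda e: e.route_position_m)
    distance_m = nxt.route_position_m - ego_route_position_m
    expected = "yes" if distance_m <= near_threshold_m else "no"
    return f"Are we near the exit for {nxt.name}?", expected
```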

Driver Mind-On Awareness Estimator:

Core Functionality: Combines inputs from perception and localization with physical engagement metrics from the driver monitoring system to assess whether the driver is both physically and mentally engaged.

Driver Mind-On Status: Determines if the driver is cognitively engaged and communicates this information to the Avatar system.
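The fusion performed by the Driver Mind-On Awareness Estimator could look roughly like the sketch below, which assumes physical attention arrives as a boolean and cognitive evidence comes from a short window of recent Avatar answer outcomes. The class name, status labels, window size, and ratio threshold are hypothetical.

```python
from collections import deque


class MindOnEstimator:
    """Hypothetical fusion of physical attention with recent Avatar answer outcomes."""

    def __init__(self, window: int = 3, min_correct_ratio: float = 0.6) -> None:
        self.recent = deque(maxlen=window)  # last N answer outcomes (True = correct)
        self.min_correct_ratio = min_correct_ratio

    def record_answer(self, correct: bool) -> None:
        self.recent.append(correct)

    def status(self, eyes_on_road: bool) -> str:
        if not eyes_on_road:
            return "physically disengaged"
        if not self.recent:
            return "unknown"  # no cognitive evidence yet: a cue for Avatar to ask
        ratio = sum(self.recent) / len(self.recent)
        return "mind-on" if ratio >= self.min_correct_ratio else "cognitively disengaged"
```

The "cognitively disengaged" case is exactly the one a camera-only estimator would report as engaged: eyes on the road, but recent answers mostly wrong or missing.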

Avatar (Interactive AI):

Driver Communication: Interacts with the driver by asking context-aware questions about the environment. For example: “What color is the car in front of us?”

Feedback: Provides verbal and visual feedback based on driver responses. Correct answers result in positive reinforcement (e.g., “Thank you for staying alert!”), while incorrect or absent answers escalate warnings to ensure cognitive engagement.

Driver Feedback Loop:

Integration: Ensures the driver remains engaged through a continuous feedback loop, dynamically adjusting the interaction based on their responses and overall awareness state.

4. Implementation

The PoC system featured a dynamic feedback loop:

Avatar assessed driver responses in real time.

Correct responses maintained normal operation, while incorrect or absent responses triggered escalating alerts.

Demo Development:

Created a MATLAB-based demo to simulate the system in various scenarios, including:

• Driver responding correctly to Avatar’s queries.

• Driver failing to respond or providing incorrect answers.

• Escalating alerts and feedback strategies.

The demo provided stakeholders with a tangible representation of the concept and enabled early validation of engagement strategies.
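The demo scenarios lend themselves to a table-driven check. The following is a Python analog for illustration only, not the MATLAB demo itself; it assumes alert levels numbered 0 (none) to 3 (highest), one escalation step per miss, and a reset on a correct answer. `SCENARIOS` and `final_alert_level` are hypothetical names.

```python
# Each scenario is a sequence of answer outcomes plus the alert level the demo
# is expected to reach at the end (0 = none ... 3 = highest, assumed scale).
SCENARIOS = {
    "driver answers correctly": (["correct", "correct"], 0),
    "driver misses once, then recovers": (["missing", "correct"], 0),
    "driver keeps failing to answer correctly": (["incorrect", "missing", "incorrect"], 3),
}


def final_alert_level(outcomes: list[str], max_level: int = 3) -> int:
    """Replay outcomes: a correct answer resets the level; each miss escalates one step."""
    level = 0
    for outcome in outcomes:
        level = 0 if outcome == "correct" else min(level + 1, max_level)
    return level


for name, (outcomes, expected) in SCENARIOS.items():
    actual = final_alert_level(outcomes)
    print(f"{name}: level {actual} ({'PASS' if actual == expected else 'FAIL'})")
```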

5. Potential Enhancements to Level 2, Level 2+, and Level 3 Automated Driving Features

Level 2:

• Proactively promotes driver attentiveness by bridging the gap between physical and cognitive engagement, reducing false "engaged" signals when driving responsibility is shared between the driver and the system.

• Helps mitigate risks associated with driver over-reliance on ADAS features in partially automated driving.

Level 2+:

• Supports more complex driving scenarios (e.g., highway driving with adaptive cruise control and lane centering) by dynamically adjusting driver interaction based on road and environmental conditions.

• Provides a smoother transition between driver and system control, helping prevent disengagement during prolonged use of semi-autonomous features.

Level 3:

• Helps ensure the driver remains ready to take control when the system requests it by actively engaging their cognitive processes during periods of automated driving.

• Enhances trust and safety by reducing the risk of the driver falling out of the loop, through ongoing, non-intrusive interactions.

6. Summary and Value Proposition

This proof of concept reinforced the importance of addressing cognitive engagement in driver monitoring systems and of bridging the gap between physical and cognitive driver engagement. By collaborating with user experience engineers, product owners, and technical experts, I designed a feature that uses interactive AI to enhance safety and usability. The process emphasized the value of cross-functional teamwork and iterative feedback in creating user-centric solutions for safety-critical systems. My ability to innovate, collaborate, and align technical solutions with safety standards such as ISO 26262 (functional safety) and ISO 21448 (SOTIF) highlights the impact I can make in advancing automated driving technologies and delivering safer, more engaging driver experiences.